Haptic Skill Transfer of High-DoF Manipulation Tasks

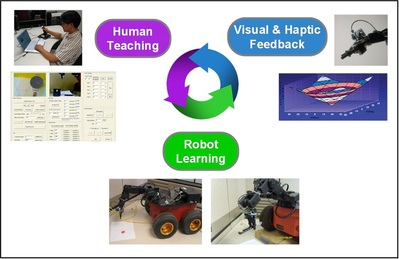

To deepen learning in human-robot interaction, this research uses the haptic modality as a mediator for both skill acquisition and skill translation. It focuses on a coordinated haptic training architecture for transferring expertise in teleoperation-based manipulation, whether from human to human or from human to robot. Robotic learning is incorporated to translate human skills into robotic knowledge, and a method for reusing this learned data in a haptic skill transfer process is investigated. The aim is to construct a reality-based haptic interaction system for knowledge transfer that links an expert’s skill with robotic movement in real time. The benefits of this approach include (i) a more compact and general representation of an expert’s knowledge, learned from a minimized set of training samples, and (ii) an increase in the capability of a novice user, achieved by coupling the skills learned by the robotic system with haptic feedback. To evaluate these ideas and demonstrate the effectiveness of this paradigm, human handwriting is selected as the experimental task. An artificial neural network (ANN) and a support vector machine (SVM) were used as the learning algorithms, and their performance was compared. Performance was evaluated with a modified Longest Common Subsequence (LCSS) algorithm. Results showed that one or two experts’ samples are sufficient to generate haptic training knowledge, which can successfully recreate the manipulation motion with a robotic system and transfer haptic forces to an untrained user through a haptic device. In the handwriting comparison, the similarity measure reached up to an 88% match even with a minimized set of training samples.
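To make the similarity measure concrete, the following is a minimal sketch of the standard LCSS similarity between two 2-D pen trajectories. It is not the modified LCSS used in this work, whose changes are not described in the abstract. The function name `lcss_similarity`, the spatial tolerance `eps`, the optional index window `delta`, and the sample trajectories are all assumptions introduced for illustration.

```python
from math import hypot


def lcss_similarity(a, b, eps=0.5, delta=None):
    """Standard LCSS similarity between two sequences of (x, y) points.

    Two points match when they lie within `eps` of each other and, if
    `delta` is given, their indices differ by at most `delta`. The score is
    the LCSS length divided by the length of the shorter sequence.
    Illustrative only: `eps` and `delta` are assumed parameters.
    """
    n, m = len(a), len(b)
    if n == 0 or m == 0:
        return 0.0
    # table[i][j] holds the LCSS length of a[:i] and b[:j].
    table = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            (x1, y1), (x2, y2) = a[i - 1], b[j - 1]
            close_in_space = hypot(x1 - x2, y1 - y2) <= eps
            close_in_time = delta is None or abs(i - j) <= delta
            if close_in_space and close_in_time:
                table[i][j] = table[i - 1][j - 1] + 1
            else:
                table[i][j] = max(table[i - 1][j], table[i][j - 1])
    return table[n][m] / min(n, m)


# Hypothetical trajectories: the third novice point strays far from the expert's.
expert = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]
novice = [(0.0, 0.1), (1.0, 1.2), (2.0, 5.0), (3.0, 3.1)]
score = lcss_similarity(expert, novice, eps=0.5)  # 3 of 4 points match -> 0.75
```

A score of 1.0 means every point of the shorter trajectory has a match within tolerance. Under this kind of measure, a reported match of 88% would correspond to a score of 0.88, although the exact normalization used by the modified algorithm may differ.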
