Rapid development in mobile and interactive computing has enriched our social networks in diverse ways, enabling a person to connect with anyone around the world. But what about people with disabilities? Can we advance current technology to assist them in better ways?

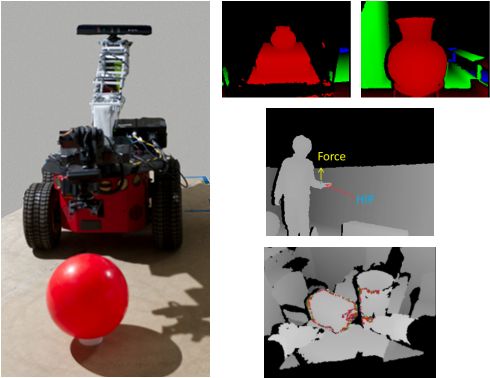

Currently, over 40 million individuals worldwide are classified as legally blind. For these individuals, perceiving objects in the environment relies on non-visual cues, which typically require direct contact with the world. Enabling remote perception, in which a blind individual perceives objects located at a distance, requires other means of transforming environmental data into a form suitable for touch. To tackle this problem, I present a framework that integrates active depth perception and visual perception from heterogeneous vision sensors to enable real-time, interactive haptic representation of the real world through a mobile manipulation robotic system. By integrating the robot's perception of a remote environment from multiple classes of sensors and transferring it to the human user through haptic environmental feedback, the framework increases the user's ability to interact with remote environments through the telepresence robot in real time.

Although many assistive devices have been developed to aid in daily living, the most common assistive means for individuals with visual impairments remain the walking cane and the guide dog. These are effective in helping the user navigate within an environment; however, the navigation space is limited to the user's immediate surroundings. Thus, I wanted to develop a method that lets individuals with disabilities extend their range of access to remote environments through robotic embodiment, enabling tele-operation and tele-perception through multi-modal feedback. In simpler terms: "telepresence robots."


This is a short video clip from a pilot study with individuals with visual impairments (VI) on haptic exploration and telerobotic navigation.